High-fidelity sensor models are critical for testing and validating perception systems. Modern ray tracing APIs enable higher-fidelity sensor simulation without sacrificing performance. Ray tracing applies to obvious domains such as photon traversal for lighting and reflections (multipath), and also to modeling artifacts such as the rolling shutter in a spinning lidar or the interference generally present in active sensors.

Vulkan is a new low-level graphics API on Linux and Windows that supports efficient multi-threaded rendering, ray tracing, and overall increased throughput compared to older APIs. It uses the latest GPUs efficiently and improves graphical fidelity and performance by removing bottlenecks and enabling more direct access to the GPU. At Applied Intuition, we are building on Vulkan's rendering capabilities to take advantage of these benefits.

In this post, we’ll discuss the limitations of traditional approaches and how Vulkan and ray tracing improve the fidelity and performance of sensor models.

Approaches to Producing Sensor Data

In a previous blog post, we discussed three main approaches to perception simulation: log resimulation, partially synthetic simulation, and fully synthetic simulation. This post focuses on fully synthetic sensor data, but ray tracing can also apply to partially synthetic approaches.

In computer graphics, there are several common rendering methods that can be used to produce sensor data. We will discuss two of these methods and the importance of the careful selection of appropriate techniques. These approaches are as follows.

- Rasterization is a traditional approach that computes final pixel values from the perspective of a sensor. While it is very efficient and can produce physically accurate results in many cases, it has difficulty capturing complex effects such as reflections and global illumination. Both problems have approximate solutions, but their errors become apparent on close inspection, either visually or in experiments.

- Ray tracing is a method of rendering that emulates the way photons bounce around the real world (i.e., bouncing off surfaces in a diffuse/specular fashion). Ray tracing differs from other rendering methods in that it allows rays to bounce multiple times, which enables accurate reflections, dynamic global illumination, and other complex effects that cannot be simulated or approximated accurately with traditional methods. Until recently, however, it was extremely slow to execute.

Limitations with Rasterization

Standard rasterization approaches rely on approximations such as cubemaps and screen space effects, which may not closely model the underlying physical processes.

Cubemap reflections

A cubemap reflection is captured from a single point in space, and the image data is encoded into textures (cubemaps) for later use. To render a reflection in a camera, the surface normal at a given pixel is used to compute a reflection direction, which is then used to look up a color in the cubemap. While this method generally works well, if the surface being rendered is at any point other than the exact point of capture, the lookup is inaccurate and places the reflection in the wrong location. The reflection may look convincing, but it is not accurate.

Additionally, cubemap reflections may lack resolution when dealing with large curved surfaces, and they do not include dynamic elements unless they are re-computed for every frame (an expensive operation).
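The positional error described above can be sketched in a few lines. This is a minimal 2D illustration, not how any particular engine implements cubemaps: the flat mirror-like floor, the vertical wall, and all function names and coordinates are assumptions made up for the example. It compares where a reflected ray actually hits the wall with where a direction-only lookup from the cubemap's capture point would land.

```python
import math


def reflect(direction, normal):
    """Mirror a 2D direction about a unit surface normal."""
    k = 2.0 * (direction[0] * normal[0] + direction[1] * normal[1])
    return (direction[0] - k * normal[0], direction[1] - k * normal[1])


def height_on_wall(origin, direction, wall_x):
    """Height at which a ray from `origin` crosses the vertical wall x = wall_x."""
    t = (wall_x - origin[0]) / direction[0]
    return origin[1] + t * direction[1]


def reflected_heights(camera, surface_point, capture_point, wall_x):
    """Return (true_height, cubemap_height) of the reflected wall point."""
    vx = surface_point[0] - camera[0]
    vy = surface_point[1] - camera[1]
    length = math.hypot(vx, vy)
    # The floor is flat, so its normal points straight up (+y).
    r = reflect((vx / length, vy / length), (0.0, 1.0))
    # Ground truth: follow the reflected ray from the actual surface point.
    true_height = height_on_wall(surface_point, r, wall_x)
    # A cubemap stores color per direction as seen from the capture point,
    # so the same direction is effectively traced from the wrong origin.
    cubemap_height = height_on_wall(capture_point, r, wall_x)
    return true_height, cubemap_height


true_h, cube_h = reflected_heights(
    camera=(0.0, 1.0),
    surface_point=(2.0, 0.0),
    capture_point=(1.0, 0.5),
    wall_x=6.0,
)
print(f"true: {true_h:.2f} m, cubemap: {cube_h:.2f} m")
```

With these made-up numbers the true reflection comes from about 2 m up the wall, while the cubemap lookup returns the color from about 3 m up. The two agree only when the surface point coincides with the capture point.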

Screen space reflections

Screen space reflections work well for dynamic objects that are on screen, so they can capture actors moving through the scene. While the geometry of the reflections is correct, the system has to fall back to cubemaps when the location to be reflected is off screen. This can cause artifacts at the borders of the image, where screen space reflection data is unavailable, for example for objects behind the rendered view.

Ray Tracing: How It Works

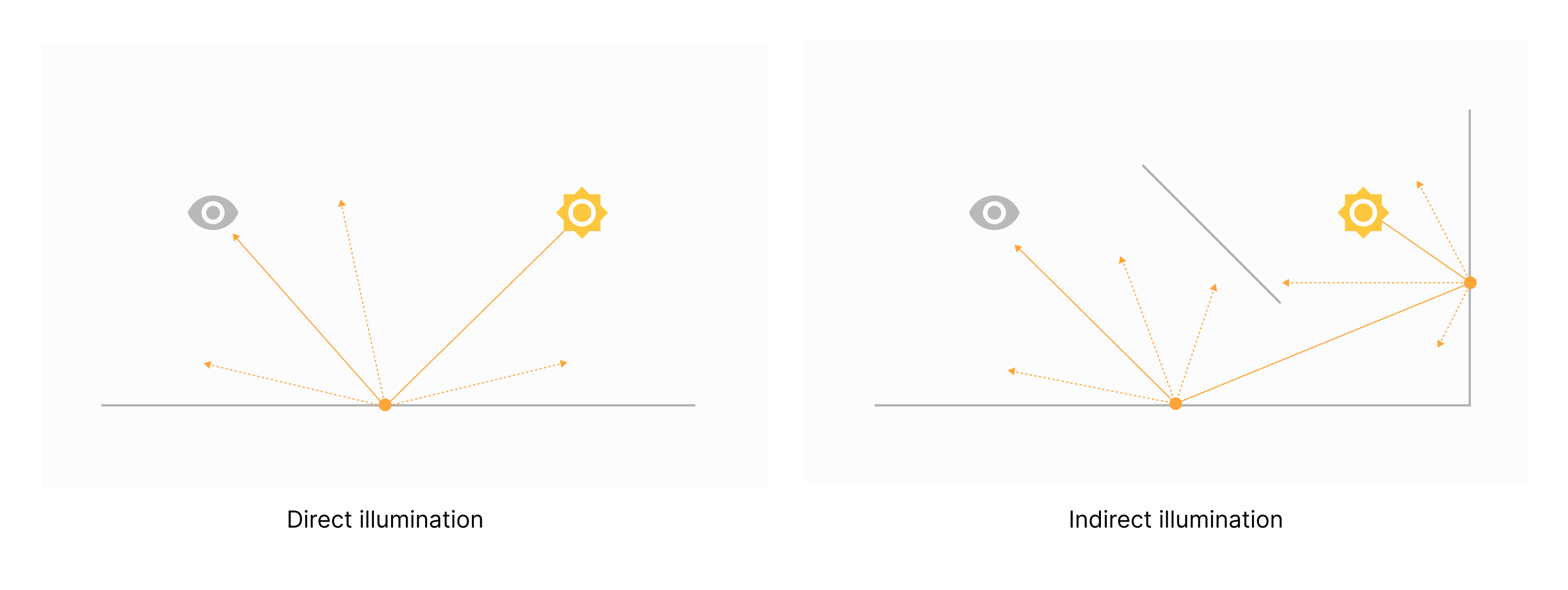

With ray tracing, we trace rays from the light source, each carrying some amount of energy. As a ray traverses the scene from the light source to the camera, it interacts with both volumetric models and surfaces in the scene. In Figure 1, we can see an example of how the secondary reflections of scattered light can influence the final scene lighting.

Focusing on the interactions at surface boundaries, a photon/ray obeys the law of conservation of energy: part of its energy is absorbed by the material, part may transmit through, and the remainder scatters into the scene, where it may intersect with the sensor view. The specific behavior of how a ray interacts with a surface is defined by the Bidirectional Reflectance Distribution Function (BRDF), which in practice can vary significantly depending on material type.
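The energy bookkeeping at a surface can be sketched as follows. This is a deliberately simplified illustration: it collapses the BRDF's angular distribution into two scalar fractions, and the names `absorptance` and `transmittance` are assumptions chosen for the example rather than parameters from any specific renderer.

```python
def split_energy(incident, absorptance, transmittance):
    """Split incident ray energy into absorbed, transmitted, and scattered parts."""
    if absorptance < 0 or transmittance < 0 or absorptance + transmittance > 1:
        raise ValueError("fractions must be non-negative and sum to at most 1")
    absorbed = incident * absorptance
    transmitted = incident * transmittance
    # Conservation of energy: whatever is left scatters back into the scene.
    scattered = incident - absorbed - transmitted
    return absorbed, transmitted, scattered


def energy_after_bounces(incident, absorptance, transmittance, bounces):
    """Energy still carried by the scattered ray after several identical bounces."""
    energy = incident
    for _ in range(bounces):
        _, _, energy = split_energy(energy, absorptance, transmittance)
    return energy


absorbed, transmitted, scattered = split_energy(1.0, 0.3, 0.2)
print(f"absorbed={absorbed:.2f} transmitted={transmitted:.2f} scattered={scattered:.2f}")
print(f"after 3 bounces: {energy_after_bounces(1.0, 0.5, 0.0, 3):.3f}")
```

Because the three parts always sum to the incident energy, the scattered ray loses energy at every bounce, which is one reason a tracer can stop following a ray once its contribution becomes negligible.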

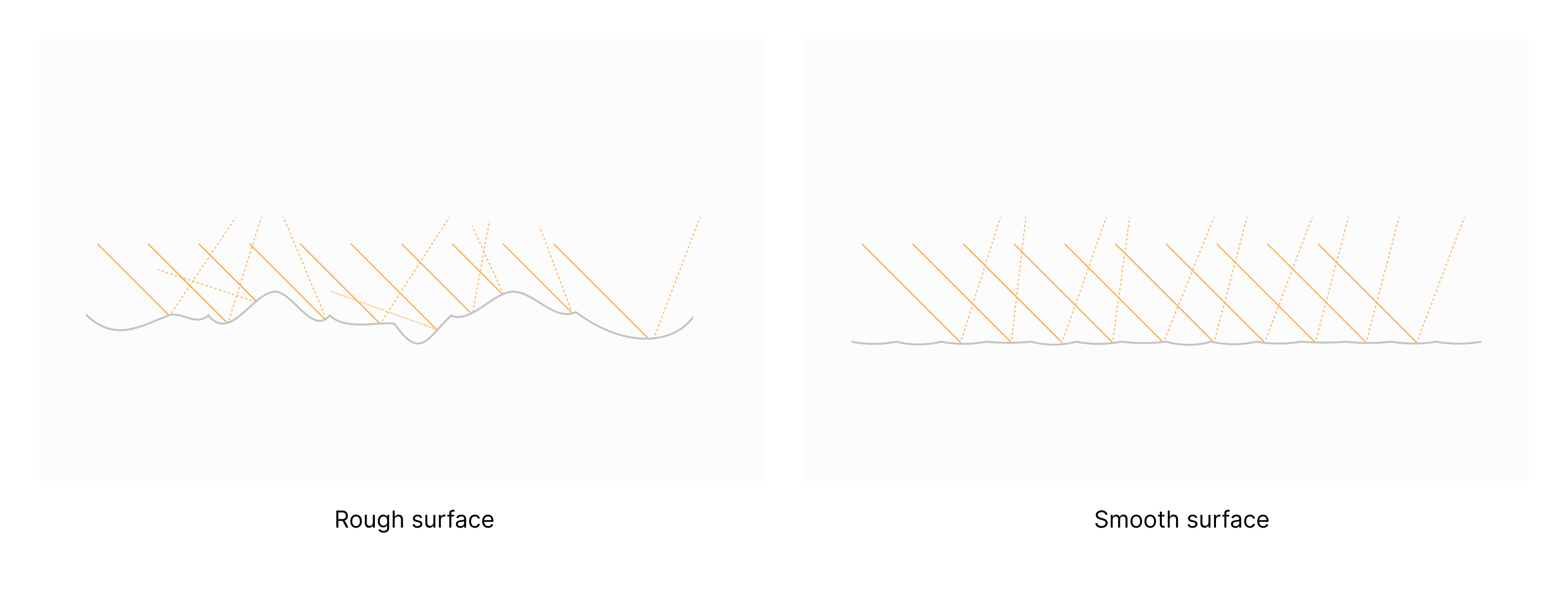

In Figure 2, we can see that the smoother a surface is, the more spatially coherent (or mirror-like) the reflected rays are. This is where ray tracing becomes increasingly important.

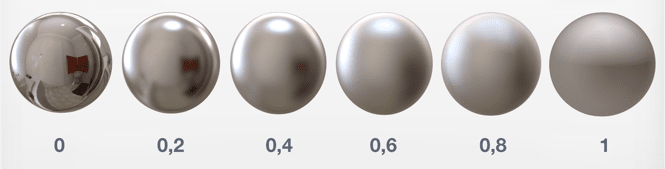

In Figure 3, we see the roughness of a metallic material changing from exhibiting a fully specular reflection to a partially diffuse reflection.
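To make the link between surface smoothness and the spread of reflected rays concrete, here is a small sketch using a Phong-style cosine-power lobe. That lobe model is an assumption swapped in for illustration (production renderers typically use microfacet BRDFs), and the function name and exponent values are hypothetical. A higher exponent stands in for a smoother surface.

```python
import math


def lobe_half_width_deg(exponent):
    """Angle from the mirror direction at which a cos^n lobe drops to half its peak."""
    return math.degrees(math.acos(0.5 ** (1.0 / exponent)))


# Larger exponents model smoother surfaces with tighter, more mirror-like lobes.
for n in (1, 10, 100, 1000):
    print(f"exponent {n:>4}: half-width {lobe_half_width_deg(n):5.1f} degrees")
```

At an exponent of 1 the lobe is very broad (half its peak at about 60 degrees off the mirror direction), and it narrows steadily as the exponent grows. The narrower the lobe, the more the result depends on exactly what lies along the mirror direction, which is where approximations such as cubemaps break down.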

Modeling Accurate Sensors With Ray Tracing

In Figure 4, we can see a qualitative example of a scene with and without ray tracing. We will walk through the differences between the images and why one is superior to the other.


When comparing the overall lighting and reflections, the advantages of ray tracing are clear. On the left (no ray tracing), the tanker truck appears highly reflective, and lidar returns would correctly become weaker at shallower grazing angles. However, the reflections of the environment are not accurate (Figure 5):

- With ray tracing, the red vehicle off frame is visible in the reflection.

- With ray tracing, the child obscured by the gas pump is visible in the reflection.

- With ray tracing, the reflection of the white vehicle is rendered properly.

- With ray tracing, the gas station roof support above the tanker is correctly visible instead of appearing stretched.

- Ray tracing shows the correct darkening of the rims that reflect darker surfaces and do not “glow.”

- Ray tracing correctly shows the subtle reflections around the black boxes on the roof.

While a gap this large is an extreme case, the comparison illustrates the benefits of ray tracing for the validation of autonomous driving systems. Ray tracing can also recreate complex issues such as a false vehicle recognition in a reflection, which cannot otherwise be simulated accurately.

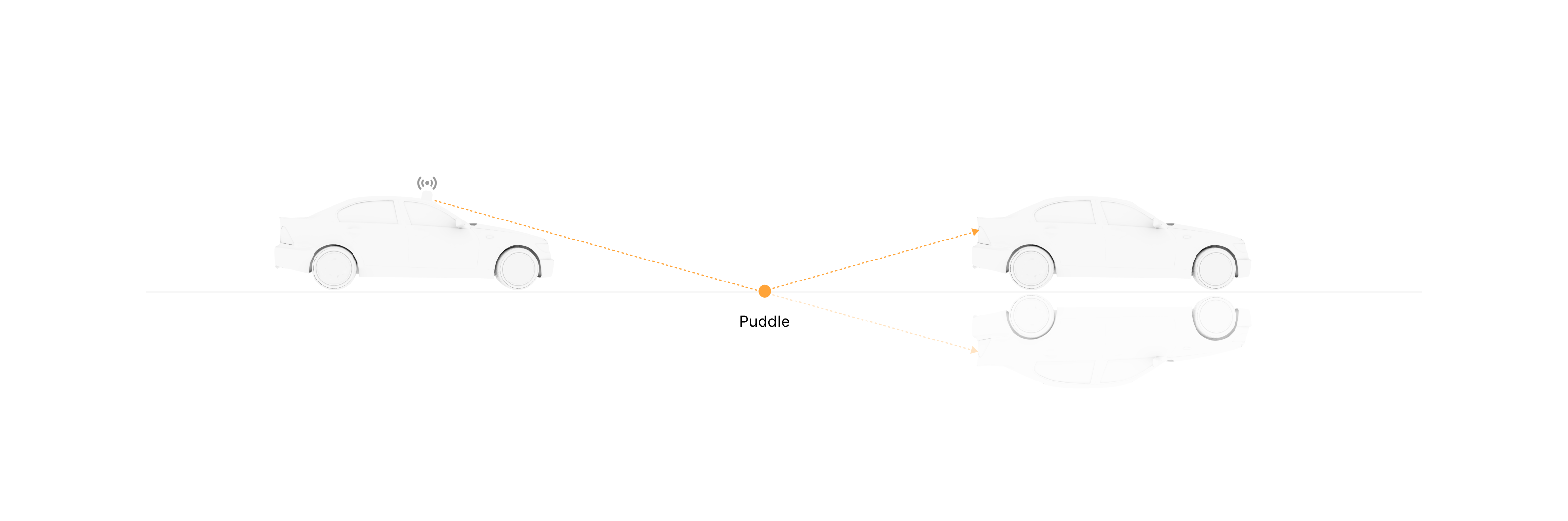

Multipath in active sensors and the generation of ghost targets

Ray tracing is also important for modeling the circumstances that create ghost detections in active sensing systems. With the right geometry and material properties, radiation from a sensor can travel a longer, indirect path before returning to the sensor, which results in an apparent elongation of the distance to a surface (Figure 6). This type of effect cannot be modeled directly with traditional rendering techniques, which poses a challenge to the verification and validation of perception systems, as discussed previously.

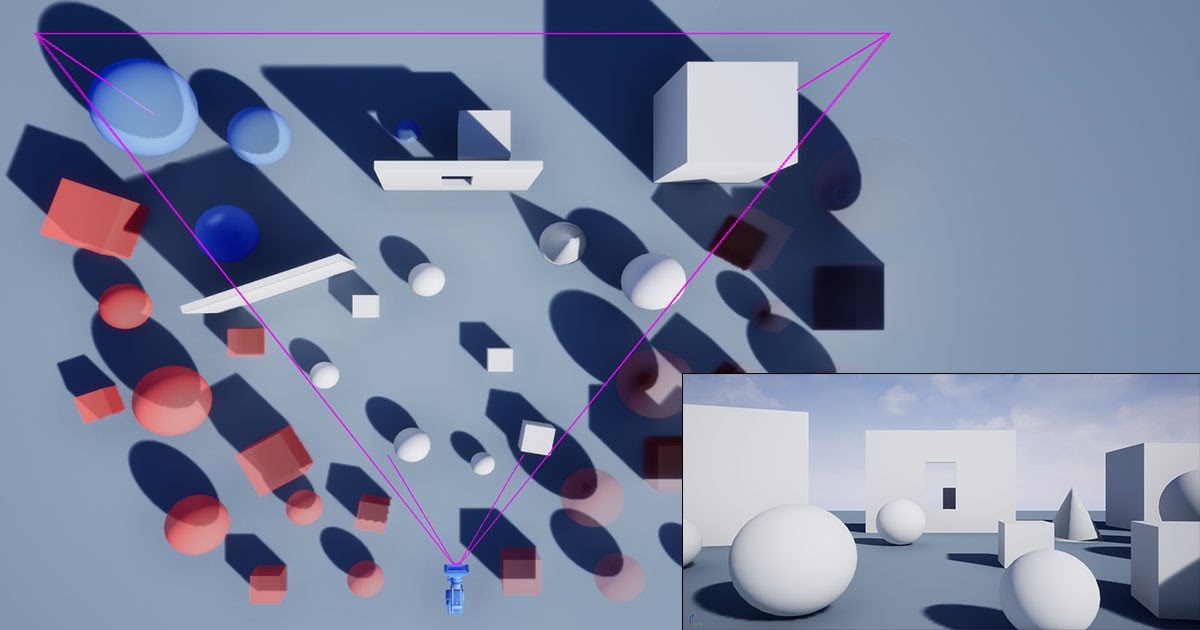
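The range elongation can be worked through with simple time-of-flight arithmetic. The sketch below assumes a single mirror-like reflector, a 2D scene, and a pulse that returns along the same path it took out. The function name and coordinates are hypothetical and only illustrate the geometry.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def ghost_detection(sensor, reflector_point, target):
    """Apparent range and position reported for a single-mirror multipath return."""
    leg_out = math.dist(sensor, reflector_point)
    leg_bounce = math.dist(reflector_point, target)
    # The pulse travels both legs out and both legs back.
    round_trip = 2.0 * (leg_out + leg_bounce)
    time_of_flight = round_trip / SPEED_OF_LIGHT
    apparent_range = SPEED_OF_LIGHT * time_of_flight / 2.0
    # The sensor assumes a straight path, so it places the return along the
    # direction in which the pulse was emitted (toward the reflector).
    ux = (reflector_point[0] - sensor[0]) / leg_out
    uy = (reflector_point[1] - sensor[1]) / leg_out
    ghost = (sensor[0] + ux * apparent_range, sensor[1] + uy * apparent_range)
    return apparent_range, ghost


rng, ghost = ghost_detection(sensor=(0.0, 0.0), reflector_point=(3.0, 4.0), target=(3.0, 10.0))
print(f"apparent range: {rng:.2f} m, ghost at ({ghost[0]:.2f}, {ghost[1]:.2f})")
```

In this example the reflector is 5 m from the sensor and the target is a further 6 m from the reflector, so the sensor reports a return at roughly 11 m along the reflector's bearing, a location where no object exists.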

Multipath also extends to cases in which the subject of a detection is not present in the primary view of a sensor. A common case is secondary or tertiary reflections from objects behind the sensor. This is challenging for traditional rendering engines, which discard all geometry that is outside the view frustum or occluded by another object. In Figure 7, we can see a great number of potential radiators that would otherwise not be considered in a given calculation.
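The culling behavior described above can be illustrated with a toy 2D field-of-view test. This is a sketch under stated assumptions: real engines cull against a full 3D frustum and also apply occlusion culling, and the object names, positions, and function names here are invented for the example.

```python
import math


def in_field_of_view(sensor, forward_deg, hfov_deg, point):
    """True if `point` lies within the sensor's horizontal field of view (2D)."""
    bearing = math.degrees(math.atan2(point[1] - sensor[1], point[0] - sensor[0]))
    # Wrap the angular difference into the range [-180, 180).
    diff = (bearing - forward_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= hfov_deg / 2.0


def cull(sensor, forward_deg, hfov_deg, objects):
    """Keep only the objects a rasterizer would consider for this view."""
    return {
        name: position
        for name, position in objects.items()
        if in_field_of_view(sensor, forward_deg, hfov_deg, position)
    }


scene = {"wall_ahead": (10.0, 0.0), "truck_behind": (-8.0, 1.0)}
visible = cull(sensor=(0.0, 0.0), forward_deg=0.0, hfov_deg=90.0, objects=scene)
print(sorted(visible))
```

Only `wall_ahead` survives the cull. If that wall is reflective, a ray bouncing off it could still reach `truck_behind`, but a pipeline that has already discarded the truck has nothing left to reflect. A ray tracer intersects rays against the whole scene, so the truck remains a candidate.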

If ray tracing is a superior rendering method, why is it not widely adopted today? The biggest limiting factor is the high cost of its hardware requirements. Although ray tracing recently gained hardware support, scaling simulations can still be an issue, especially in the cloud. Ray tracing is also typically slower to run than traditional rasterization. Productionizing these capabilities represents the state of the art in sensor simulation.

Applied Intuition’s Approach With Vulkan

At Applied Intuition, we’re developing our own custom API for Vulkan to support ray tracing. This will push the state of the art for what is possible with ray tracing given the tradeoffs mentioned throughout this post. If you’re interested in learning more about our approach to ray tracing rendering, reach out to our engineering team!
