Measuring performance coverage is critical for understanding the readiness of Automated Driving Systems (ADSs). However, it is challenging to precisely define and measure relevant indicators of readiness. Furthermore, as the autonomous vehicle stack matures towards Level 4/5 autonomy, the indicators and the methodology may need to be adjusted because of the large number of scenarios involved. In this post, the Applied Intuition team shares insights into currently used coverage metrics, common challenges, and an ODD-based domain coverage approach that could prove helpful.

Building full-scale, commercially-ready systems is a difficult task because an ADS could encounter countless situations in the real world and must safely navigate across this staggering variance. “Coverage” is used broadly to indicate the development progress of ADSs and addresses questions such as “when can I remove safety drivers?” and “how would the ADS perform after a software update?” Across the industry, companies are adjusting their projected autonomous vehicle deployment timelines because it is difficult to know exactly how far they are from deployment if they cannot quantify domain coverage or the speed of progress towards their coverage goals. There is an urgent need for shared industry knowledge on this topic.

As there is no generally accepted definition of “coverage,” quantifying coverage is a challenge. Coverage needs to encompass many dimensions such as safety, usefulness, types of situations, and types of traffic participants. More companies developing ADSs are publishing Voluntary Safety Self-Assessments (VSSAs) to assess the commercial readiness of their autonomous systems. Yet federal standards such as the FMVSS have not been updated to reflect advancing Level 4+ autonomy technology. As a result, the methodologies used to measure coverage are diverging across the industry. Furthermore, the definition varies with the application domain (e.g., long-haul autonomous trucks vs. sidewalk delivery robots) and the maturity of the autonomous vehicle stack (e.g., initial real-world tests in fenced-off areas vs. perfecting unprotected left turns in a business district).

Code, Requirements, and Scenario Coverage

In software engineering, coverage is commonly understood and defined as the degree to which the source code of a program is executed by a set of automated unit or integration tests. It is an inward-looking measure that quantifies how thoroughly the developers are automatically guarding against regressions.

Requirements coverage measures how many of the functional and performance requirements are met by an ADS. It is a key metric that, with the right tooling, allows a program to track how requirements translate to tests. Many tools have traditionally been used in the industry to track and test these requirements. The biggest assumption here is that the user has captured the right set of requirements to comply with functional safety standards, such as ISO 26262, and has precisely defined the system functions in all situations ahead of time.

Scenario coverage measures how many pre-defined scenarios could be navigated by an autonomous system. This type of coverage could incorporate various considerations such as maps, applications, and specific software version goals. Similar to requirements coverage, scenario coverage is relatively easy to put in place but assumes that the right things are being tested. With adequate tooling to express scenarios (including parameter variations) and explore the space of combinations, scenario coverage could be a strong driver for ADS software quality improvements.
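To make the two definitions above concrete, here is a minimal Python sketch. The `ScenarioResult` record, the requirement IDs, and the rule that a requirement counts as met only when it has at least one linked scenario and none of them fail are all assumptions made for illustration, not a description of any particular tool.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class ScenarioResult:
    scenario_id: str
    requirement_ids: Tuple[str, ...]  # requirements this scenario is linked to
    passed: bool


def scenario_coverage(results: Sequence[ScenarioResult]) -> float:
    """Fraction of pre-defined scenarios that the system navigated successfully."""
    if not results:
        return 0.0
    return sum(r.passed for r in results) / len(results)


def requirements_coverage(requirement_ids: Sequence[str],
                          results: Sequence[ScenarioResult]) -> float:
    """Fraction of requirements with at least one linked scenario and no failures."""
    outcomes = {rid: [] for rid in requirement_ids}
    for r in results:
        for rid in r.requirement_ids:
            if rid in outcomes:
                outcomes[rid].append(r.passed)
    met = [rid for rid, passed in outcomes.items() if passed and all(passed)]
    return len(met) / len(requirement_ids) if requirement_ids else 0.0


# Hypothetical data: REQ-3 has no linked scenario, REQ-2 has one failing scenario.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
results = [
    ScenarioResult("left_turn_empty_road", ("REQ-1",), True),
    ScenarioResult("left_turn_jaywalker", ("REQ-2",), False),
    ScenarioResult("left_turn_crosswalk", ("REQ-2",), True),
    ScenarioResult("left_turn_oncoming", ("REQ-1",), True),
]

print(f"scenario coverage:     {scenario_coverage(results):.2f}")
print(f"requirements coverage: {requirements_coverage(requirements, results):.2f}")
```

With this made-up data, three of four scenarios pass, yet only one of three requirements is met because one requirement is never exercised. Both numbers are only as meaningful as the set of requirements and scenarios behind them.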

Challenges With Requirements and Scenario Coverage Approaches

However, if used incorrectly, requirements and scenario coverage metrics may create a false sense of security. The challenges come from the sheer number of requirements and scenarios that need to be considered and the complexity of the real world and the ADS. The reactions of other traffic participants to the actions of the autonomous system create a combinatorial explosion of variants that need to be handled. Even if the ADS performs well in an isolated scenario (e.g. decelerating in front of a jaywalking pedestrian on an empty road), the number of potential corner cases that arise from interactions between agents in the scenario is staggering.

To illustrate this, let’s consider a specific example of a left-turn scenario. The system could be evaluated on a range of vehicle parameter variations such as turn angle, speed, and braking in front of pedestrians crossing the street. There are also various types of pedestrians and other Vulnerable Road Users (VRUs) that might be in front of the ADS. The system needs to handle various types, numbers, and reaction behaviors of the VRUs. The timing of when each VRU is perceived by the ADS is also critical since it may introduce new corner cases. On top of this, each intersection in a map has a different geometry and the variations need to be tested based on configurable parameters. An example of a variation is shown in Figure 1.

This explosion of possible scenarios is known in mathematics as the ‘curse of dimensionality’: each new parameter multiplies the number of tests needed to sample the space completely, so the total grows exponentially with the number of parameters. A few approaches exist to mitigate this, but they generally don’t apply directly to ADSs. One common approach for continuous phenomena is to sample a smaller subsection of the overall domain and infer the targeted attributes of the system from it. However, ADS failures are inherently non-continuous, so a sparse sample offers no mathematical proof of coverage. Another approach is to reduce the number of dimensions through derived mathematical relations, but most parameters in ADS scenarios are independent of one another. As a result, no established best practices exist to date for sampling parameter variations effectively.
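The growth is easy to see with a few lines of Python. The parameter names and the number of sampled values per parameter below are invented for illustration, loosely following the left-turn example. Only the arithmetic matters: an exhaustive grid needs the product of all per-parameter counts.

```python
import math

# Hypothetical left-turn parameters and how many values of each we sample.
levels = {
    "ego_speed": 5,
    "turn_angle": 5,
    "pedestrian_count": 4,
    "pedestrian_type": 3,
    "pedestrian_reaction": 3,
    "perception_timing": 5,
    "intersection_geometry": 10,
}


def grid_size(levels_per_parameter):
    """Number of test runs needed to cover every combination exactly once."""
    return math.prod(levels_per_parameter.values())


total = grid_size(levels)
print(f"{len(levels)} parameters -> {total:,} runs")  # 7 parameters -> 45,000 runs

# Adding one more parameter with 5 sampled values multiplies the total by 5.
with_weather = {**levels, "weather": 5}
print(f"{len(with_weather)} parameters -> {grid_size(with_weather):,} runs")  # 225,000
```

Even with these coarse, made-up sample counts, a single maneuver already requires tens of thousands of runs, and every additional parameter multiplies that number again.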

Adding to the complexity, previously unforeseen requirements need to be integrated into the existing test suite, further increasing the number of variations. Many driving situations are combinations of requirements and interacting behaviors. While the SOTIF standard (ISO 21448, Safety of the Intended Functionality) attempts to address these challenges through broader risk assessment, a more systematic approach is needed for coverage measurement. Together, these challenges have led to a lack of consensus on common metrics for ADS coverage.

Moving Towards Domain Coverage

Given the need for a common coverage definition and the limitations of current approaches, the industry would benefit from a more comprehensive approach, termed domain coverage. Domain coverage is measured directly on the underlying operational design domain (ODD). The main focus is to link the ODD to the evolving requirements and test scenario definitions so that a program can measure whether an ADS is ready for deployment in the domain in question. Some of these requirements are global (e.g., don't hit pedestrians) while others are scoped to specific sets of scenarios (especially for regression tests or discovering corner cases for AV sub-systems). These requirements and scenarios should be independent of a particular location in a map (e.g., an intersection) or a specific city, unless the ODD itself constrains them. Finally, the measurement should include results from both real-world and simulated tests, and it should be updated from those metrics and tests automatically and as quickly as possible.

Domain coverage could be thought of as a tailored ‘driving test’ based on the system’s purpose in an ODD. For a given attribute of the ODD, industry players and regulators could then define more precise requirements and test scenarios. The required coverage would then be the sum of the coverage of all the individual elements of the ODD.
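One possible way to turn this idea into a number is sketched below in Python. Everything in it is an assumption for illustration: the ODD attributes and values, the test record layout, the rule that an ODD value counts as covered only if it has at least one test and no failures, and the equal weighting used to combine attributes. A real program would likely choose different attributes, rules, and weights.

```python
from collections import defaultdict

# Hypothetical ODD: attribute -> values the system is expected to handle.
odd = {
    "weather": {"clear", "rain", "fog"},
    "road_type": {"urban", "highway"},
    "lighting": {"day", "night"},
}

# Hypothetical test records from both simulation and real-world drives.
tests = [
    {"tags": {"weather": "clear", "road_type": "urban", "lighting": "day"},
     "source": "simulation", "passed": True},
    {"tags": {"weather": "rain", "road_type": "urban", "lighting": "night"},
     "source": "real_world", "passed": True},
    {"tags": {"weather": "clear", "road_type": "highway", "lighting": "day"},
     "source": "simulation", "passed": False},
]


def domain_coverage(odd, tests):
    """Per-attribute fraction of ODD values with at least one test and no failures."""
    outcomes = defaultdict(list)
    for test in tests:
        for attribute, value in test["tags"].items():
            outcomes[(attribute, value)].append(test["passed"])
    report = {}
    for attribute, values in odd.items():
        covered = [v for v in values
                   if outcomes.get((attribute, v)) and all(outcomes[(attribute, v)])]
        report[attribute] = len(covered) / len(values)
    return report


report = domain_coverage(odd, tests)
# Assumption: every ODD attribute carries equal weight in the overall figure.
overall = sum(report.values()) / len(report)

for attribute, fraction in report.items():
    print(f"{attribute:10s} {fraction:.2f}")
print(f"{'overall':10s} {overall:.2f}")
```

The per-attribute breakdown is arguably more useful than the overall figure: here it shows that fog has never been tested and that highway driving has only failing results.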

While this approach would help with standardizing meaningful coverage measurements, several challenges remain:

- This approach still needs to integrate performance results from both simulation and drive data, which are difficult to map onto ODDs automatically.

- ODD parameters across different industry domains and geographies need to be defined precisely. It is unclear who would be driving this process today.

- The pass/fail conditions on each test need to express requirements at higher levels of abstraction, but concrete metrics (e.g., the minimum distance between the host vehicle and another agent) are too specific to be included in domain requirements.

- Various methods and tools are required to keep measurements tractable across the scale of software and configuration changes.
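To illustrate the kind of low-level pass/fail condition mentioned in the list above, here is a minimal Python sketch of a minimum-distance check. It assumes both trajectories are sampled at the same timestamps and treats each actor as a point, ignoring vehicle dimensions. The function names and the thresholds are hypothetical.

```python
import math


def min_distance(ego_xy, agent_xy):
    """Smallest point-to-point distance between two time-aligned trajectories."""
    return min(math.dist(ego, agent) for ego, agent in zip(ego_xy, agent_xy))


def passes_min_distance(ego_xy, agent_xy, threshold_m):
    """Pass if the ego never gets closer to the agent than threshold_m meters."""
    return min_distance(ego_xy, agent_xy) >= threshold_m


# Hypothetical positions in meters, one sample per timestep.
ego = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
pedestrian = [(0.0, 5.0), (1.0, 3.0), (2.0, 4.0)]

print(min_distance(ego, pedestrian))              # 3.0
print(passes_min_distance(ego, pedestrian, 2.0))  # True
print(passes_min_distance(ego, pedestrian, 3.5))  # False
```

A check like this is precise but tied to one scenario and one threshold, which is why it is hard to lift directly into a domain-level requirement such as "don't hit pedestrians."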

Using Tools to Start Understanding Real-World Coverage

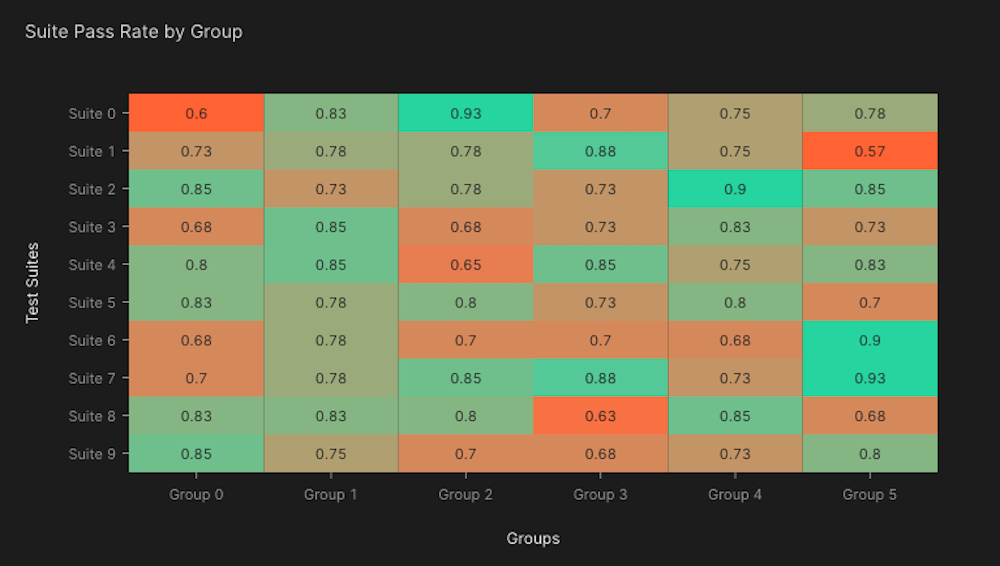

Despite the limitations of both commonly used and potential new approaches, current tools could still have a significant impact by helping ADS developers understand how much of the known space has been sampled. Specifically, linking functional safety requirements to scenario coverage is critical to illustrating domain coverage based on scenario test suites. A sample view is shown in Figure 2, where scenario coverage is seen across different requirement dimensions. Applied Intuition, in collaboration with industry partners, has built robust analytics and visualization tools to support developers in measuring coverage and progress towards their autonomy goals.

Conclusion

While the autonomous vehicle industry urgently needs a way to measure coverage to assess whether the technology is ready to deploy, current approaches are not sufficient. An approach based on ODD fundamentals would be ideal, but technical challenges remain. In the meantime, the right tools could enable developers to identify the next focus areas and reach their autonomy goals faster. Applied Intuition supports key workflows for ADS teams worldwide by providing tools and infrastructure built with domain expertise and years of experience in real-world autonomy development.

Do you want to learn more about domain coverage? Schedule time with our engineers!
