During early testing, advanced driver-assistance systems (ADAS) and autonomous vehicle (AV) engineering teams can rely primarily on synthetic simulation to test their automated driving systems. As ADAS and AV systems mature, more on-road testing becomes necessary to verify that the vehicle (ego) can safely handle the enormous number of possible events that might occur in its operational design domain (ODD).

During on-road testing, the ego may encounter disengagements (i.e., events where the safety driver disengages the system and takes over control of the vehicle). ADAS and AV engineers commonly use open-loop log replay tools to play back disengagement events, see why the safety driver intervened, and fix issues in the localization or perception stack before resuming on-road tests. However, open-loop log replay tools cannot determine whether a disengagement was actually necessary or whether the ego could have avoided a crash had the safety driver not intervened. They also cannot show how varying parameters (e.g., a pedestrian on the road or worse visibility due to different weather conditions) would have affected an event’s outcome.

This blog post discusses how open-loop log replay helps evaluate the performance of perception and localization systems (i.e., ‘what happened’) and how re-simulation helps evaluate motion planning and control systems (i.e., ‘what would have happened’). Together, the two approaches let teams distinguish between necessary and unnecessary disengagements and comprehensively verify and validate the full AV stack.

Open-Loop Log Replay: Explore ‘What Happened’

Today, the most common type of log replay is open-loop. Open-loop log replay offers the ability to play back recorded drive data to find issues, analyze what happened, and evaluate improvements.

For example, consider an on-road test in which the ego approaches the end of a traffic jam and the safety driver intervenes to stop the ego and avoid a collision (Figure 1).

Open-loop log replay can be used to:

- Analyze what happened: Replay the drive log to visualize why the safety driver intervened. In our example, we can see that the driver intervened because the ego is approaching a stopped vehicle at a high speed.

- Evaluate localization and perception performance: Replay the raw sensor data from the event and rate the performance by comparing stack outputs to manually labeled ground truth.

- Explore solutions: Make improvements to the localization and perception systems before conducting further on-road tests. Improvements to the localization and perception stacks can be tested by re-running the event with the updated stack version.
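Comparing stack outputs to labeled ground truth usually comes down to matching detections to labels and counting hits and misses. The sketch below is a minimal illustration under simplifying assumptions: `Box`, `match_detections`, and `precision_recall` are hypothetical names, actors are reduced to 2D center points in the ego frame, and matching is greedy by center distance within a hypothetical 2 m threshold. Production evaluations typically use IoU-based or optimal (e.g., Hungarian) matching instead.

```python
import math
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical detected or labeled actor, reduced to its 2D center (m, ego frame)."""
    x: float
    y: float


def match_detections(detections, ground_truth, max_dist=2.0):
    """Greedily match each detection to the nearest unmatched label within max_dist.

    Returns (true_positives, false_positives, false_negatives).
    """
    unmatched_gt = list(range(len(ground_truth)))
    tp = 0
    for det in detections:
        best_idx, best_dist = None, max_dist
        for i in unmatched_gt:
            gt = ground_truth[i]
            d = math.hypot(det.x - gt.x, det.y - gt.y)
            if d <= best_dist:
                best_idx, best_dist = i, d
        if best_idx is not None:
            unmatched_gt.remove(best_idx)  # each label can be matched only once
            tp += 1
    fp = len(detections) - tp  # detections with no label nearby
    fn = len(unmatched_gt)     # labels the stack missed
    return tp, fp, fn


def precision_recall(detections, ground_truth, max_dist=2.0):
    tp, fp, fn = match_detections(detections, ground_truth, max_dist)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


# One replayed frame: three labeled vehicles, two found, one spurious detection.
gt = [Box(20.0, 0.0), Box(35.0, 0.5), Box(50.0, -3.0)]
dets = [Box(20.4, 0.1), Box(34.2, 0.3), Box(10.0, 8.0)]
print(precision_recall(dets, gt))
```

Aggregating these counts over every frame of the replayed event gives a per-event score that can be compared before and after a perception change.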

Even though open-loop log replay allows engineers to play back and analyze disengagements, it is limited to evaluating localization and perception performance. Because replayed data cannot respond to differing stack behavior, open-loop log replay cannot evaluate how the motion planning and control systems would have behaved had the safety driver not intervened.

Re-Simulation: Explore ‘What Would Have Happened,’ ‘What Should Have Happened,’ and ‘What Might Have Happened’

Closed-loop log replay, or re-simulation, is a log replay approach that addresses these limitations of open-loop log replay. Re-simulation allows development teams to recreate a logged, real-world drive scene and alter it using simulation.

Re-simulation can be used to:

- Analyze and triage: See what would have happened if the driver hadn’t intervened to determine if the disengagement was necessary and how severe the situation would have been. Re-simulation tools achieve this by performing a closed-loop replay that responds to stack outputs. The re-simulation results include numerical metrics and observers (i.e., pass/fail rules) to measure the stack’s performance and determine whether or not the event was a real issue. In our example, the disengagement was indeed necessary because, without it, the ego would have caused a collision (Figure 2).

- Fix root-cause issues: Determine what should have happened to avoid necessary disengagements by running the motion planning and control stack on the recorded event. Use the re-simulation results to identify the root cause of the issue and develop a solution. Once a fix is in place, re-run the re-simulation with the improved stack to confirm that the problem is addressed.

- Augment logged data: See what might have happened in different variations of the same scenario to evaluate the performance of the entire stack and increase coverage of long-tail events. Engineers can add or remove actors (e.g., a pedestrian on the road), modify actors’ behaviors (e.g., increase the ego’s or another vehicle’s speed), change other parameters of the scene (e.g., rain or time of day), inject faults into the AV software or hardware (e.g., a sensor restarting, a subsystem temporarily going offline, or faulty sensor hardware), or add noise to sensor data (e.g., noisy lidar data). Figure 3 shows an example of modifying actor behavior by replacing a logged actor with a synthetic actor whose behavior is editable through a set of waypoints.
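The traffic-jam example can be reduced to a toy closed-loop replay to show what "responds to stack outputs" means. In this sketch (all names, thresholds, and the 1D longitudinal model are hypothetical assumptions, not any tool’s API), the logged lead vehicle is replayed as stopped, while the ego’s motion comes from a planner rather than from the log. A closest-approach metric and a collision observer then decide pass/fail, first for a buggy planner (the disengagement was necessary) and then for a fixed one (the fix is confirmed).

```python
from typing import Callable

# Hypothetical planner interface: (gap to lead vehicle in m, ego speed in m/s) -> accel in m/s^2
Planner = Callable[[float, float], float]


def late_braking_planner(gap: float, v: float) -> float:
    """Hypothetical buggy stack: brakes only once the gap drops below 15 m."""
    return -6.0 if gap < 15.0 else 0.0


def stopping_distance_planner(gap: float, v: float) -> float:
    """Hypothetical fixed stack: brakes once the gap is within the distance
    needed to stop at 6 m/s^2, plus a 10 m margin."""
    return -6.0 if gap < v * v / (2 * 6.0) + 10.0 else 0.0


def resimulate(planner: Planner, ego_v: float, gap0: float,
               dt: float = 0.1, duration: float = 10.0) -> dict:
    """Toy closed-loop replay in 1D.

    The logged lead vehicle is replayed as stopped gap0 m ahead of the ego's
    start; the ego's motion is driven by the planner's outputs, not by the log.
    """
    x, v = 0.0, ego_v
    min_gap = gap0
    for _ in range(round(duration / dt)):
        accel = planner(gap0 - x, v)   # stack output for this tick
        v = max(0.0, v + accel * dt)   # simulated ego responds to the stack
        x += v * dt
        min_gap = min(min_gap, gap0 - x)
    # Metric: closest approach. Observer: pass/fail rule "no collision".
    return {"min_gap_m": min_gap, "no_collision": min_gap > 0.0}


print(resimulate(late_braking_planner, ego_v=25.0, gap0=80.0))
print(resimulate(stopping_distance_planner, ego_v=25.0, gap0=80.0))
```

Sweeping `ego_v` or `gap0` over a range of values is a simple stand-in for the data augmentation described above: the same logged scene is re-run under variations that were never actually driven.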

Re-simulation architecture

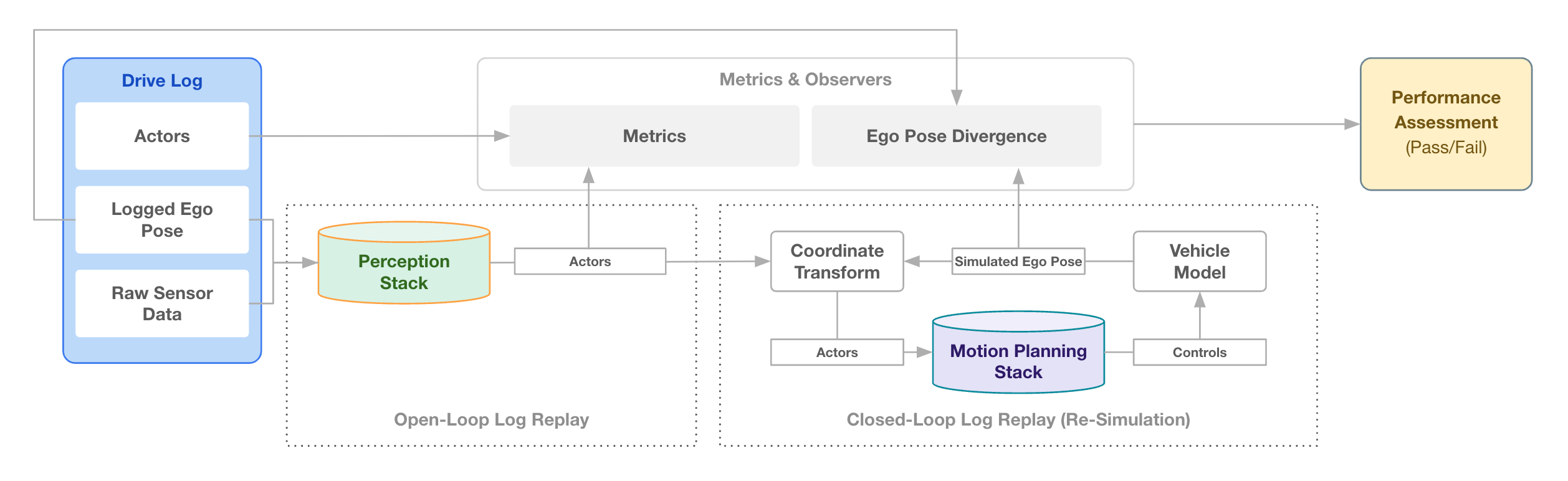

A typical architecture of a re-simulation tool looks as follows (Figure 4):

First, raw sensor data is fed into the perception stack in an open-loop log replay, and the detected actors are extracted. Then, the motion planning stack runs in a closed loop. For this to work, the perception outputs must be modified to account for ego divergence, i.e., the difference between the logged ego pose and the simulated ego pose. In a drive log, detected actors may be reported relative to the ego. To get from the open-loop reference frame to the re-simulation reference frame, actor positions therefore need to be transformed to align with the simulated ego pose (coordinate transform). Throughout the entire process, the metrics and observers framework collects signals to evaluate the ego’s performance (pass/fail).
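The coordinate transform step can be sketched with 2D rigid-body poses. This is a minimal illustration assuming planar poses (x, y, yaw) and actor positions reported in the logged ego frame; `Pose2D`, `ego_to_world`, `world_to_ego`, and `reproject_actor` are hypothetical names. The detection is first lifted into the world frame using the logged ego pose, then expressed in the frame of the diverged, simulated ego.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Hypothetical planar ego pose in the world frame."""
    x: float
    y: float
    yaw: float  # radians, counterclockwise from the world x-axis


def ego_to_world(pose: Pose2D, px: float, py: float) -> tuple[float, float]:
    """Rotate an ego-relative point by the ego's yaw, then translate by its position."""
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return pose.x + c * px - s * py, pose.y + s * px + c * py


def world_to_ego(pose: Pose2D, wx: float, wy: float) -> tuple[float, float]:
    """Inverse of ego_to_world: translate, then rotate by -yaw."""
    dx, dy = wx - pose.x, wy - pose.y
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return c * dx + s * dy, -s * dx + c * dy


def reproject_actor(logged_ego: Pose2D, sim_ego: Pose2D,
                    px: float, py: float) -> tuple[float, float]:
    """Move a detection from the logged-ego frame into the simulated-ego frame."""
    wx, wy = ego_to_world(logged_ego, px, py)
    return world_to_ego(sim_ego, wx, wy)


# Logged ego saw a stopped car 30 m ahead; the simulated ego has driven 10 m further.
logged = Pose2D(100.0, 0.0, 0.0)
simulated = Pose2D(110.0, 0.0, 0.0)
print(reproject_actor(logged, simulated, 30.0, 0.0))
```

A full implementation would also rotate actor headings and velocities and account for 3D poses and timestamp alignment; this sketch handles positions only.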

Technical challenges of re-simulation

Re-simulation comes with technical challenges, which can result in costly failures that engineers need to manually investigate and fix.

First, triage and engineering teams need to be able to trust that a re-simulation is accurate and reproducible. This can be validated by running re-simulations on log sections without a disengagement and confirming that the ego divergence is small.
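One way to automate this check is to compare time-aligned ego positions from the log and from the re-simulation, and also to confirm that repeated runs produce identical trajectories. The sketch below assumes both trajectories are already resampled to the same timestamps; the function names and the 0.5 m tolerance are hypothetical choices for illustration.

```python
import math

Point = tuple[float, float]


def max_ego_divergence(logged: list[Point], resim: list[Point]) -> float:
    """Largest distance (m) between time-aligned logged and re-simulated ego positions."""
    if len(logged) != len(resim) or not logged:
        raise ValueError("expected non-empty, time-aligned trajectories of equal length")
    return max(math.hypot(lx - rx, ly - ry)
               for (lx, ly), (rx, ry) in zip(logged, resim))


def check_resim_fidelity(logged: list[Point], resim_runs: list[list[Point]],
                         tolerance_m: float = 0.5) -> tuple[bool, bool, float]:
    """Accurate if every run stays within tolerance of the log (hypothetical threshold);
    reproducible if all runs are identical."""
    divergences = [max_ego_divergence(logged, run) for run in resim_runs]
    accurate = all(d <= tolerance_m for d in divergences)
    reproducible = all(run == resim_runs[0] for run in resim_runs)
    return accurate, reproducible, max(divergences)


# A log section without a disengagement, re-simulated twice.
logged = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
run = [(0.0, 0.0), (1.0, 0.1), (2.1, 0.1), (3.2, 0.0)]
print(check_resim_fidelity(logged, [run, list(run)]))
```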

Second, if the stack runs on non-vehicle hardware, it can fall behind the re-simulation. This is particularly problematic when re-simulations run in the cloud, where machines can be significantly less powerful than the vehicle’s compute. If the stack falls behind, it may react to events with a delay. The resulting ego behavior will then be inaccurate and non-deterministic (i.e., vary every time the re-simulation runs). Re-simulation tools need to prevent this for results to be meaningful and reproducible across different machines.
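The post does not specify how re-simulation tools prevent this. One common approach, shown here as an assumed technique rather than any particular product’s design, is lockstep (synchronous) stepping: the simulation clock advances only after the stack has returned its output for the current tick. The toy model below contrasts lockstep with a simplified real-time mode in which a slow stack’s commands arrive one or more ticks late. `TICK_S`, `toy_stack`, and `run` are hypothetical, and `latency_s` stands in for how fast a given machine runs the stack.

```python
import math
from typing import Callable

TICK_S = 0.1  # hypothetical simulation tick period, seconds


def toy_stack(x: float) -> float:
    """Hypothetical planner: command -5 m/s^2 once the ego passes x = 10 m."""
    return -5.0 if x > 10.0 else 0.0


def run(latency_s: Callable[[], float], lockstep: bool, ticks: int = 60) -> float:
    """Return the ego's final position (m) after `ticks` simulation steps."""
    x, v, accel = 0.0, 20.0, 0.0
    pending: list[tuple[int, float]] = []  # (tick at which command arrives, command)
    for tick in range(ticks):
        cmd = toy_stack(x)
        if lockstep:
            # The clock waits for the stack, so the command applies on the tick
            # it was computed for, no matter how slow the machine is.
            accel = cmd
        else:
            # Simplified real-time mode: the clock keeps running while the stack
            # computes, so a slow stack's command arrives late.
            delay = math.ceil(latency_s() / TICK_S)
            pending.append((tick + delay, cmd))
            arrived = [c for t, c in pending if t <= tick]
            if arrived:
                accel = arrived[-1]  # newest command that has arrived
            pending = [(t, c) for t, c in pending if t > tick]
        v = max(0.0, v + accel * TICK_S)
        x += v * TICK_S
    return x


fast_machine = lambda: 0.05  # stack computes in 50 ms
slow_machine = lambda: 0.35  # stack computes in 350 ms
print(run(fast_machine, lockstep=True), run(slow_machine, lockstep=True))
print(run(fast_machine, lockstep=False), run(slow_machine, lockstep=False))
```

In lockstep mode both machines yield the same stopping point, while in real-time mode the slower machine brakes later and stops further down the road. The trade-off is wall-clock time: a lockstep re-simulation on slow hardware runs slower than real time instead of producing different results.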

Applied Intuition’s Approach

Both open-loop log replay and closed-loop re-simulation are necessary to comprehensively evaluate the on-road performance of an AV stack. Open-loop log replay allows engineers to explore what happened during disengagements and evaluate localization and perception stack performance. Re-simulation enables them to distinguish between necessary and unnecessary disengagements and fix root-cause issues in the motion planning and control stack. Together, the two approaches help development teams verify and validate their entire AV stack and bring safe autonomous systems to market faster.

Applied Intuition’s re-simulation tool Log Sim* enables both open-loop and closed-loop log replay. If you are interested in how Log Sim works, contact our engineering team for a product demo.

*Note: Log Sim was previously known as Logstream.
